If you’re a Splunk administrator, or if you’ve taken the Splunk administrator classes, you may have heard that you can use the Deployment Server to push apps to the Search Head Deployer (aka the Deployer) and the Master Node (aka the Indexer Cluster Master). It sounds nice in theory, and Splunk’s official take on the matter makes it sound quite simple. But what happens if you actually do it? Well, I tried it, and it turned out to be a lot more complicated than I thought.

For reference, the information presented here is accurate up to Splunk version 7.2.6.

Background

A Deployment Server (DS) is a Splunk instance that pushes Splunk apps to other Splunk servers or instances. Apps are similar to the apps on your phone in that each one performs a specific function. A DS comes in handy once your Splunk environment starts growing, because it saves the average Splunk admin a lot of time. Say you have one app installed on 100 Splunk servers and you update it: you now need to push that change to all 100 servers. Done manually, that means copying the new files to each server with SCP and then restarting every Splunk instance. With a DS, you can push the change to all 100 servers at the same time and have each instance of Splunk restart automatically. The DS keeps a list of servers and a list of the apps that should go to those servers, and copies those apps (exactly as they are found on the DS) to each of them.

The Search Head Deployer (SHD) is like a DS in that it pushes apps to every search head in the search head cluster; each recipient is known as a client. The push also triggers a rolling restart of the search heads. A rolling restart is safer because it restarts the members one by one rather than all at once, which keeps availability as high as possible; that matters because the search heads are customer facing. The SHD pushes apps differently because Splunk users need to be able to save their own knowledge objects (KOs). The SHD merges each app’s local/ and default/ folders and pushes the merged result to the default/ folder on the destination client (i.e. the search head). Users can then save their own KOs to that app’s local/ folder, which takes precedence over the pushed default/ folder.

The Master Node (MN) is sometimes referred to as the Indexer Cluster Master. Like the SHD and DS, the MN pushes apps to the members of its indexer cluster. The MN also controls bucket replication between members, among many other things. For apps, though, it pushes them the same way the DS does and does not merge anything the way the SHD does. If a restart is required, the MN performs and monitors a rolling restart of each cluster member.

The apps that a Splunk instance actually runs are located at $SPLUNK_HOME/etc/apps/.

The apps that the DS pushes are located at $SPLUNK_HOME/etc/deployment-apps/.

The apps that the MN pushes are located at $SPLUNK_HOME/etc/master-apps/.

The apps that the SHD pushes are located at $SPLUNK_HOME/etc/shcluster/apps/.
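If you script around these locations, a small lookup keeps the four paths in one place. This is a minimal sketch: the role labels (local, ds, mn, shd) are hypothetical names chosen for it, and SPLUNK_HOME falls back to /opt/splunk when it is not set.

```shell
# Print the apps directory associated with a given Splunk role.
# The role labels are hypothetical; SPLUNK_HOME defaults to /opt/splunk.
apps_dir_for_role() {
    splunk_home="${SPLUNK_HOME:-/opt/splunk}"
    case "$1" in
        local) echo "$splunk_home/etc/apps" ;;             # apps this instance runs itself
        ds)    echo "$splunk_home/etc/deployment-apps" ;;  # apps the DS pushes
        mn)    echo "$splunk_home/etc/master-apps" ;;      # apps the MN pushes to indexers
        shd)   echo "$splunk_home/etc/shcluster/apps" ;;   # apps the SHD pushes to search heads
        *)     echo "unknown role: $1" >&2; return 1 ;;
    esac
}
```

For example, ls "$(apps_dir_for_role mn)" lists the apps staged on the MN.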

The Concept

As Splunk introduces it, the concept is simply that the DS can send apps to the MN and SHD just as it does to all of its other clients (Splunk instances receiving apps from the DS).

Now, it’s important to note that the MN, the SHD, and even the DS itself can all be clients of the DS. Yes, the DS can send apps to itself. That’s because each of these servers is its own Splunk instance: it runs apps and sends its data to the indexers like every other Splunk instance. The MN and SHD push apps to their cluster members, but they don’t use those cluster apps on their own instance of Splunk. So apps can be pushed from the DS to the MN and SHD for further distribution without the MN or SHD ever running them.

So if we want to push deployment apps to the MN and SHD, we need to update $SPLUNK_HOME/etc/system/local/deploymentclient.conf on each of them by adding:

[deployment-client]

repositoryLocation = /new/folder/location

This tells the client to place every app it receives from the DS in the new location instead of the default, $SPLUNK_HOME/etc/apps.

So, to push apps to the MN, we set the repositoryLocation value on the MN to $SPLUNK_HOME/etc/master-apps/.

To push apps to the SHD, we set the repositoryLocation value on the SHD to $SPLUNK_HOME/etc/shcluster/apps/.
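A minimal sketch of that change, assuming you are writing the file fresh on the MN or SHD. The [target-broker:deploymentServer] stanza and the ds.example.com:8089 address are assumptions not covered in the article (a deployment client needs some way to find its DS, and this is the standard stanza for it). Any existing file is copied to deploymentclient.conf.bak before it is replaced, and the function name is hypothetical.

```shell
# Write deploymentclient.conf so apps received from the DS land in a
# cluster staging directory instead of $SPLUNK_HOME/etc/apps.
# Assumption: the [target-broker:deploymentServer] stanza and the DS
# address passed in are not from the article; adjust them to your DS.
write_deploymentclient_conf() {
    instance_home="$1"   # this instance's SPLUNK_HOME, e.g. /opt/splunk
    repo_location="$2"   # e.g. '$SPLUNK_HOME/etc/master-apps' on the MN
    ds_uri="$3"          # host:management_port of the DS, e.g. ds.example.com:8089
    conf_dir="$instance_home/etc/system/local"
    conf_file="$conf_dir/deploymentclient.conf"
    mkdir -p "$conf_dir"
    # Keep a copy of any existing file before replacing it.
    if [ -f "$conf_file" ]; then
        cp "$conf_file" "$conf_file.bak"
    fi
    cat > "$conf_file" <<EOF
[deployment-client]
repositoryLocation = $repo_location

[target-broker:deploymentServer]
targetUri = $ds_uri
EOF
}
```

On the MN, for example, you might run write_deploymentclient_conf /opt/splunk '$SPLUNK_HOME/etc/master-apps' ds.example.com:8089 and then restart Splunk. The single quotes keep $SPLUNK_HOME literal so that Splunk, not the shell, expands it.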

After that, just restart the Splunk instance, and the client will start negotiating with the DS.

There is one more step we need to perform on the DS before this will work. We have to mark the apps meant for the SHD and MN as “noop” (no operation). This is because when the DS pushes an app, it tells the client to start using it immediately. But these apps are not meant for the MN and SHD to use; they are meant for the MN and SHD to deploy to their cluster members. There is no parameter on the clients that can prevent this, so the change must be made on the DS.

So we need to edit $SPLUNK_HOME/etc/system/local/serverclass.conf on the DS.

In this file, each app has its own stanza labeled with its server class, and the stateOnClient parameter is the one we need:

[serverClass:ServerClassName:app:AppName]

restartSplunkWeb = 0

restartSplunkd = 0

stateOnClient = enabled

The default state is enabled. In the Splunk web GUI you can change this to disabled. But the web GUI does not provide the third option we need here, which is noop.

[serverClass:ServerClassName:app:AppName]

restartSplunkWeb = 0

restartSplunkd = 0

stateOnClient = noop

Now restart the DS and those apps will be in a noop state.

Should be fine, right? It’s not….not really.

The Problem

Changing stateOnClient to noop is critical. In any other state, the receiving client will try to install the apps, even when they are set to “disabled” (disabled means the app is still installed, just not actively used by the client). Splunk installs apps when the instance starts, and it only looks in the default app path, $SPLUNK_HOME/etc/apps. Because we changed the receiving path, the received apps never land in etc/apps/, so Splunk cannot find them. Meanwhile, the client is still communicating with the DS, so Splunk knows the apps exist, and the DS keeps telling the client to install them from the new repository location. That isn’t possible, so Splunk starts flooding the logs with errors. Setting stateOnClient to noop prevents this problem.

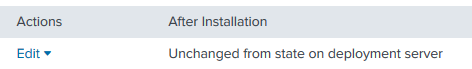

The real problem lies with the app on the deployment server. If you look at the app on the DS, its state is now listed as “Unchanged from state on deployment server”, which is the GUI’s translation of “noop”.

The catch is that this state is NOT kept separately for each server class. For example, say you have two (or more) server classes that both contain the same app. You change the state of the app in class A to noop and leave the state of the same app in class B as enabled. When you restart Splunk and check the GUI, both class A and class B will show that shared app as “Unchanged from state on deployment server”, aka noop. In other words, changing an app’s state to noop in one server class changes it in every other server class that contains the app.

The only way around this is to create a clone of the app under a different name. That is no fun, because every change made to the app then has to be made to both copies. One workaround is to create a symlink to the real app and use it as the clone; the DS will not know the difference and will treat it as a new app. If you have multiple administrators in charge of your DS, though, this can be problematic. Also, some apps simply cannot be renamed or cloned. The best examples are technology add-ons (TAs).
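The symlink workaround might look like the sketch below, run on the DS against its deployment-apps directory. The helper’s name and the alias name are hypothetical, and the link uses a relative target so it stays valid if deployment-apps is copied or restored elsewhere. Check the TA caveat that follows before aliasing a TA.

```shell
# Create a symlinked "clone" of a deployment app so the alias can be
# assigned its own stateOnClient in a different server class.
make_app_alias() {
    deploy_dir="$1"   # e.g. /opt/splunk/etc/deployment-apps
    app_name="$2"     # existing app directory, e.g. Splunk_TA_nix
    alias_name="$3"   # hypothetical new name, e.g. Splunk_TA_nix_shd
    if [ ! -d "$deploy_dir/$app_name" ]; then
        echo "no such app: $deploy_dir/$app_name" >&2
        return 1
    fi
    # Relative target: the link resolves to a sibling directory of itself.
    ln -s "$app_name" "$deploy_dir/$alias_name"
}
```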

Many TAs include scripts that are set to run directly from $SPLUNK_HOME/etc/apps/<app_name>. The TA knows the app’s default name, so it includes that name in the script path. If you clone or rename the app, the TA won’t work because it can’t find the path it’s looking for, and you will see a ton of script errors in the logs. This may not be the case for all TAs, but it is certainly the case for many I’ve come across.
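Before cloning or aliasing a TA, a quick grep can reveal whether it hard-codes its own directory name, for example in a [script://$SPLUNK_HOME/etc/apps/<app_name>/bin/...] stanza in inputs.conf. This hypothetical helper only catches the literal text etc/apps/<app_name>; scripts that build the path some other way won’t show up, so treat a clean result as a hint rather than a guarantee.

```shell
# Print file:line matches where an app references its own install path.
# Returns 0 if any match was found (the app is risky to rename), 1 otherwise.
find_hardcoded_app_paths() {
    app_dir="$1"                        # the app as it sits on the DS
    app_name="$(basename "$app_dir")"   # directory name the app expects
    grep -rnF "etc/apps/$app_name" "$app_dir"
}
```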

But if you don’t want to clone or rename an app (and we shouldn’t have to; hey Splunk, maybe fix this), you simply cannot use that app in any other server class. So if your app should go to the SHD and to a forwarder, for example, the app will not work on the forwarder because it was set to “noop” for the SHD.

Another major issue you may run into is server load. Moving all of your MN and SHD apps into the DS repository increases the total number of apps there. For small environments, this likely won’t be a problem. But the larger your environment gets, the more apps you will use, and the faster your repository fills up. The more apps you have, the more CPU your DS will use to process them.

If your machine is not very powerful (e.g. a virtual machine with limited resources), you will notice a hit on your DS’s overall performance. With too many apps, a forced reload using the command

/opt/splunk/bin/splunk reload deploy-server

may cause a major slowdown. Under these conditions, a forced reload caused my DS to stop responding completely for ~90 seconds. This is because the reload forces a re-deployment of all the server classes, meaning every app is sent to all of its assigned clients, even clients that don’t really need it.

A decent workaround for this problem is to add the server class name to the reload command, which tells the DS to reload only that specific server class:

/opt/splunk/bin/splunk reload deploy-server -class <class_name>

The Solution

After considering all of this, I decided it would be easier to send the apps to an intermediate (proxy) client that forwards them to the correct destination. So I created some virtual machines and installed the Splunk Universal Forwarder on them. I added those machines to the DS as new clients and created two new server classes:

indexer_cluster

searchHead_cluster

Each proxy machine is the only client of its server class, and the apps assigned to each class are the apps that need to be installed on the corresponding cluster. The DS sends those apps to the proxy machine in “enabled” state. On the proxy machine, the command below runs via cron every minute:

rsync -rqup --delete --ignore-errors --exclude-from=/home/splunk/excludeUF.txt /opt/splunkforwarder/etc/apps/ splunk@SPLUNK_IP:/opt/splunk/etc/shcluster/apps/

--delete allows the proxy to remove apps from the SHD/MN destination as required. For whole apps to be removed, the source must be the apps directory itself (ending in /) rather than a /* glob, because rsync only deletes inside the directories it was asked to sync.

--ignore-errors tells rsync to go ahead with those deletions even when there are I/O errors; without it, rsync disables --delete whenever the sending side reports an I/O error.

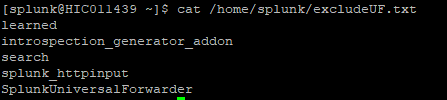

The --exclude-from option loads the list of folder names in the referenced file, and rsync skips those folders. In this example, they are apps I don’t need to sync because I’m not pushing changes to them, so be sure your list is accurate.

The rsync command copies everything in the UF client’s apps folder to the shcluster/apps/ folder on the SHD, or to the master-apps/ folder on the MN.
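Wrapped as a function, the proxy’s sync step might look like this. It is a sketch of the cron job above using the same flags, with the source written as a directory ending in / so that --delete can prune apps removed from the proxy. Because excluded names are also protected from deletion on the receiver, the exclude file matters in both directions: a leading / on each name (for example /search) anchors it to the top of the transfer, and on the MN, master-apps likely already holds a _cluster directory that you would want to exclude as well. The function name and crontab path are hypothetical.

```shell
# Mirror the UF proxy's received apps into the SHD or MN staging directory.
# Example crontab entry (script path is hypothetical):
#   * * * * * /home/splunk/sync_proxy_apps.sh
sync_proxy_apps() {
    src_dir="$1"        # e.g. /opt/splunkforwarder/etc/apps
    dest="$2"           # e.g. splunk@SPLUNK_IP:/opt/splunk/etc/shcluster/apps/
    exclude_file="$3"   # e.g. /home/splunk/excludeUF.txt, one name per line
    # -r recurse, -u skip files that are newer on the receiver,
    # -p preserve permissions, -q suppress non-error output.
    # The trailing "/" on the source makes --delete prune whole apps;
    # excluded names are skipped and also protected from deletion.
    rsync -rqup --delete --ignore-errors \
        --exclude-from="$exclude_file" \
        "$src_dir/" "$dest"
}
```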

Now that the apps reach their final destination (SHD/MN), they can be pushed out to the clusters from the SHD and MN as needed. The proxy machines are technically running the apps, but since they are universal forwarders rather than heavy forwarders, the apps won’t do much of anything there.
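The push itself is done with Splunk’s CLI on each staging server: splunk apply shcluster-bundle on the SHD and splunk apply cluster-bundle on the MN. The article doesn’t show these commands, so treat the wrappers below as a sketch. The member URI and credentials are placeholders, and SPLUNK_BIN is a hypothetical override pointing at the splunk binary (default /opt/splunk/bin/splunk).

```shell
# Push the staged shcluster/apps to the search head cluster (run on the SHD).
# -target can be any one cluster member's management URI.
push_shc_bundle() {
    "${SPLUNK_BIN:-/opt/splunk/bin/splunk}" apply shcluster-bundle \
        -target "$1" -auth "$2"
}

# Push the staged master-apps to the indexer cluster (run on the MN).
# --answer-yes skips the interactive confirmation prompt.
push_indexer_bundle() {
    "${SPLUNK_BIN:-/opt/splunk/bin/splunk}" apply cluster-bundle --answer-yes
}
```

For example: push_shc_bundle https://sh1.example.com:8089 admin:yourpassword, where both values are placeholders.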

But what about apps meant to run on the SHD and MN themselves? Because we didn’t need to change deploymentclient.conf on those machines, they still receive DS apps in $SPLUNK_HOME/etc/apps, so we can send them their own apps through another server class. To keep things simple, I created two new server classes for these specific machines:

Deployer_local

MasterNode_local

Any app that the SHD needs to use for itself is added to the Deployer_local class, and the SHD client is added to that class as well. Only these apps are pushed to the SHD’s main $SPLUNK_HOME/etc/apps folder, so I don’t need to worry about the search head cluster apps there. The SHD now receives two different sets of apps through two different server classes: its own apps directly from the DS, and the cluster apps through the proxy.
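Put together, serverclass.conf on the DS might be laid out roughly like this. Only the four server class names come from the article; every hostname and app name is a hypothetical placeholder, and the apps stay in the default enabled state. The function simply prints the skeleton so it can be reviewed before anything is merged into the real file.

```shell
# Print a serverclass.conf skeleton for the proxy layout.
# Hostnames (shc-proxy01, idx-proxy01, deployer01, master01) and app
# names (example_*) are hypothetical placeholders.
print_proxy_serverclasses() {
    cat <<'EOF'
# Proxy UFs that relay cluster apps to the SHD and MN via rsync
[serverClass:searchHead_cluster]
whitelist.0 = shc-proxy01

[serverClass:searchHead_cluster:app:example_shc_app]
stateOnClient = enabled

[serverClass:indexer_cluster]
whitelist.0 = idx-proxy01

[serverClass:indexer_cluster:app:example_indexer_app]
stateOnClient = enabled

# Apps the SHD and MN run themselves, delivered to $SPLUNK_HOME/etc/apps
[serverClass:Deployer_local]
whitelist.0 = deployer01

[serverClass:Deployer_local:app:example_deployer_app]
stateOnClient = enabled

[serverClass:MasterNode_local]
whitelist.0 = master01

[serverClass:MasterNode_local:app:example_master_app]
stateOnClient = enabled
EOF
}
```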

This method has worked very well so far and allows me to keep the apps in enabled state. If I remove an app from the server class, it is removed from the apps folder of the proxy machine, and then the proxy machine uses rsync to delete that app from the SHD/MN. I don’t have to clone or rename apps and I am able to use the DS to push apps to every single device in my environment. I hope this was helpful to others.