This next section focuses on building a distributed enterprise Splunk environment. I will be using the latest version of Splunk, which is currently 8.0.5. This will age out over time, but most commands should stay similar and continue to work. If something fails, Google is your friend and Splunk’s documentation should be able to bridge the gap.

You don’t have to do this in a strict order, so you are welcome to change things up. But if you want to learn as you go, I suggest you follow this plan.

Indexers

The indexers are the storage servers, so they need more storage space. Even if this is just for testing and you never use it, best to just provision it as needed.

Edit the VM and add 2 more hard disks: one 500 GB and the other 2000 GB. You may not have that much space, but since this is a VM it won’t allocate the space unless you tell it to. So you can just use the space you have and let the VM pretend it has that much available.

Each indexer should get two more hard disks (totaling 4 disks). One disk will emulate HOT storage and the other COLD storage. This is not proper hot/cold storage; the structure is only intended to demonstrate how it is used. If you really want to go hardcore, you can create WARM and FROZEN disks as well, but for this demo it’s overkill.

Restart the VMs to load the new disks into the OS. They should appear in the /dev folder.

Verify the volumes using command:

# lsblk

/dev/sdc should have 500 GB and /dev/sdd should have 2000 GB. If they are swapped, you will need to adjust the fstab info below.

Use the previous chapter to initialize and partition the new disks. In the /etc/fstab file, name the mount points to reflect the storage destination (/hot and /cold).
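If you would rather not eyeball the lsblk output, here is a small sketch that pulls the size of one disk out of it. The helper name disk_gib is my own, and the sample input is made up so the snippet runs anywhere; on a real indexer you would pipe lsblk into it as shown in the comment.

```shell
# Print the size of one disk in whole GiB, given "NAME SIZE_IN_BYTES" lines on stdin.
# disk_gib is a hypothetical helper name, not a standard tool.
disk_gib() {
  awk -v dev="$1" '$1 == dev { printf "%d\n", $2 / (1024 * 1024 * 1024) }'
}

# Real usage on an indexer (-b bytes, -d whole disks only, -n no header row):
#   lsblk -b -d -n -o NAME,SIZE | disk_gib sdc
# Demo with made-up sample input:
printf 'sdc 536870912000\nsdd 2147483648000\n' | disk_gib sdc
printf 'sdc 536870912000\nsdd 2147483648000\n' | disk_gib sdd
```

For the sample input this prints 500 and then 2000. If the numbers come back the other way around on your VM, the disks are swapped.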

# nano /etc/fstab

Once fstab is updated, we can delete the mount points we created earlier. Stop Splunk and run these commands:

# /opt/splunk/bin/splunk stop

# rm -rf /hot

# rm -rf /cold

We only want to delete these mount points on the indexers.

Now reboot to activate the mount points. Be sure the fstab is perfect; a server with a bad fstab may not boot.
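Since a typo in fstab can stop the boot, a quick sanity check before rebooting is cheap insurance. This is only a sketch: check_fstab_fields is a helper name I made up, and it only counts fields per line, nothing more. The device names /dev/sdc1 and /dev/sdd1 and the ext4 type in the demo are assumptions, so use whatever you created in the previous chapter.

```shell
# Return non-zero if any active line in an fstab-style file has a field count
# outside 4..6 (fields 5 and 6, dump and pass, are optional).
check_fstab_fields() {
  awk '
    /^[ \t]*#/ || /^[ \t]*$/ { next }   # skip comments and blank lines
    NF < 4 || NF > 6 { printf "line %d: %d fields\n", NR, NF; bad = 1 }
    END { exit bad + 0 }
  ' "$1"
}

# Demo on a temp file; the devices and filesystem type are assumed examples.
tmp=$(mktemp)
printf '%s\n' '# indexer data disks' \
  '/dev/sdc1 /hot ext4 defaults 0 0' \
  '/dev/sdd1 /cold ext4 defaults 0 0' > "$tmp"
check_fstab_fields "$tmp" && echo "fstab fields look sane"
rm -f "$tmp"
```

On the real server you would run check_fstab_fields /etc/fstab. If your distribution ships a recent util-linux, findmnt --verify does a more thorough check of the same file.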

License Manager

Since all our servers will be looking for a license, let’s configure the license server first. After this server is configured it will need to be running at all times.

Go to SETTINGS > LICENSING

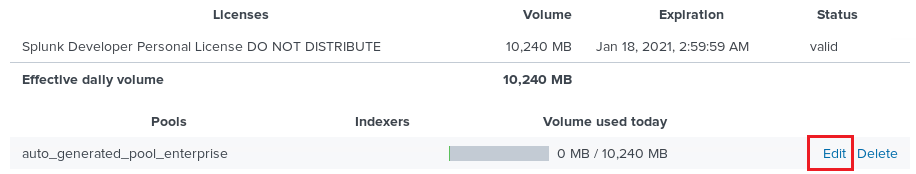

Click ADD LICENSE. If you are able to upload your DEV license, click BROWSE.

But in most cases you will need to rely on VMware Tools so you can copy/paste the XML. Click the link to activate the text box so you can paste in the XML data from the license file.

Click INSTALL. It should prompt for a restart, so go ahead and click RESTART NOW.

When it returns you should see your license listed with a max of 10 GB of data per day and a 6-month expiration.

Click the EDIT link for that license.

Ensure that any indexer that connects can use this license. Change this value if needed and click SUBMIT.

Activate license clients

Now let’s activate this license on all other Splunk servers. FYI, as with everything, the CLI method is fastest, but I’ll show the GUI method as well.

GUI METHOD:

Log in to the GUI of each server. Go to SETTINGS > LICENSING.

At the top, you will see the option to change the server to a slave device. Click this button.

Use the 2nd option and add the IP address of the license manager, then append :8089 as shown below.

Click SAVE then RESTART NOW

CLI METHOD:

SSH to each server. Use this command to designate the license manager:

# /opt/splunk/bin/splunk edit licenser-localslave -master_uri https://192.168.11.15:8089 -auth admin:'splunkpass'

Change info in the above command as needed.

Then restart Splunk for the change to take effect.

To restart Splunk, use this command:

# /opt/splunk/bin/splunk restart

(Keep this command handy; you will need it whenever I say to restart Splunk.)

Indexer Cluster

First we will configure the indexer nodes, and then the master node.

Use the client machine and open the terminal. You can use one terminal window with multiple tabs by right-clicking the terminal and selecting NEW TAB.

SSH to all indexers and the master node.

Run this command on all servers:

# nano /opt/splunk/etc/system/local/server.conf

On the Master Node, paste in the below text at the end of the file

[clustering]

mode = master

replication_factor = 3

search_factor = 1

pass4SymmKey = thispassword

cluster_label = indexerCluster1

A search factor of 2 is ideal, but every searchable copy costs extra disk space on the indexers. The hard limits are that the replication factor cannot exceed the number of indexers and the search factor cannot exceed the replication factor. Since we have 3 indexers, a replication factor of 3 with a search factor of 1 works, and since search redundancy is not a big deal in dev, this will do.

thispassword can be whatever password you want. For this demo, we are keeping it simple. This is the indexer cluster password. Write it down; there will be other passwords.

MASTERNODE (used in the indexer config below) is the IP address of the master node server. In prod you will want to use DNS, but since we didn’t set that up, the IP address will work just fine.

Be sure to use the INTERNAL IP ADDRESS (the 192.168.11.x network).
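If you want to try other factor combinations before editing server.conf, here is a minimal sketch of the two limits as I understand them from Splunk’s documentation: the replication factor cannot be larger than the number of peer nodes, and the search factor cannot be larger than the replication factor. The function name check_factors is hypothetical.

```shell
# check_factors PEERS REPLICATION_FACTOR SEARCH_FACTOR
# Prints "ok" or the reason the combination is rejected.
check_factors() {
  peers=$1; rf=$2; sf=$3
  if [ "$rf" -gt "$peers" ]; then
    echo "replication_factor $rf exceeds peer count $peers"; return 1
  fi
  if [ "$sf" -gt "$rf" ]; then
    echo "search_factor $sf exceeds replication_factor $rf"; return 1
  fi
  echo "ok"
}

# The values used in this lab: 3 indexers, replication 3, search 1.
check_factors 3 3 1
```

This only checks the arithmetic. The master node does its own validation when it starts, so treat this as a quick pre-check rather than the final word.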

On the other 3 indexers, paste the following:

[clustering]

master_uri = https://MASTERNODE:8089

mode = slave

pass4SymmKey = thispassword

[replication_port://9887]

Save the server.conf file and exit.

Restart Splunk on the master node, and then restart Splunk on the peer nodes.

Log in to the Master Node web GUI and go to SETTINGS > INDEXER CLUSTERING. It should show that clustering is enabled and list each peer node.

Keep in mind this is the first time running things, so restarting Splunk on every server at the same time, or even the whole cluster at once, isn’t a big deal right now. But normally, when everything is working correctly, only a limited number of machines can be “down” at any single point.

indexes.conf

Now that the indexers and master node are working together, we need to make the indexes.conf file that will tell the indexers how to manage each index. But since we are using a distributed environment (as opposed to standalone), we need to distribute the indexes.conf file the right way.

So we are going to use the Master Node to push “apps” to the indexer nodes. All Splunk instances have the /opt/splunk/etc/master-apps folder, but that folder is only used by the Master Node. Anything found inside the master-apps folder will be pushed to all members of the indexer cluster, into their /opt/splunk/etc/slave-apps folder. Since we are doing this, we will also create an inputs.conf file to add some settings for all indexers.

SSH to the master node. Go to the master-apps folder:

# cd /opt/splunk/etc/master-apps

Here we will create two folders.

# mkdir -p indexers_indexes_list/local

# mkdir -p indexers_global_settings/local

Make the indexes.conf file.

# nano indexers_indexes_list/local/indexes.conf

Paste in this text and save

[volume:hot1]

path = /hot/splunk

maxVolumeDataSizeMB = 450000

[volume:cold1]

path = /cold/splunk

maxVolumeDataSizeMB = 1950000

[default]

repFactor = auto

homePath = volume:hot1/$_index_name/db

# warm located in hot volume

coldPath = volume:cold1/$_index_name/colddb

thawedPath = $SPLUNK_DB/$_index_name/thaweddb

# 1 year max

frozenTimePeriodInSecs = 31536000

[test]

maxTotalDataSizeMB = 1500

maxWarmDBCount = 20

## 2 months (60 days) max for TEST index

frozenTimePeriodInSecs = 5184000

[linux]

maxTotalDataSizeMB = 30000

maxDataSize = auto_high_volume

maxWarmDBCount = 200

maxMemMB = 20

Let’s have a look at this file while we’re here.

The first part defines the paths where Splunk should store the data. This is the reason we made the /hot and /cold mount points for the indexers. Splunk has 100 GB of room to run the application, but we reserved the 500 GB disk for hot/warm and the 2000 GB disk for cold storage. The maxVolumeDataSizeMB setting tells Splunk the max size of the volume so it doesn’t go over and potentially crash Splunk. You want to keep this number close to the actual max value but just a tad under.
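The two maxVolumeDataSizeMB values in the file both work out to the disk size minus 50000 MB of headroom, if you count 1 GB as 1000 MB. Here is that arithmetic as a tiny sketch. The function name volume_cap_mb and the 50000 MB headroom are my reading of the numbers, not a Splunk rule, and the usable space on a formatted disk will be a bit lower than the raw size.

```shell
# volume_cap_mb DISK_GB HEADROOM_MB
# Disk size in MB (1 GB counted as 1000 MB) minus the headroom to leave free.
volume_cap_mb() {
  echo $(( $1 * 1000 - $2 ))
}

volume_cap_mb 500 50000    # hot volume
volume_cap_mb 2000 50000   # cold volume
```

This prints 450000 and 1950000, matching the two volume stanzas. For a real deployment it is safer to start from what df reports for the mounted filesystem.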

The [default] stanza has the parameters ALL indexes will get unless you specify settings for an index in its own stanza. Think of this as inheritance: all indexes inherit these settings first, and any specific definitions found later overwrite them.

Here we are saying all indexes use the same paths, and we are using a built-in variable to make life easy. $_index_name is a placeholder for the name of each index, like [firewall].

HOT1 and COLD1 were already defined in the previous stanzas.

We set frozenTimePeriodInSecs because this is often a global parameter. Here we are saying the data is kept for a max of 1 year. After that it’s frozen, and since we don’t have frozen storage configured, the data is deleted.

The test index is very important to have as a starter index. Since it’s for testing, we are defining it to use less storage than other, more important indexes. The 1500 (MB) limit is per indexer.

maxWarmDBCount keeps a limit on the number of warm buckets, which live on the HOT volume. This is helpful because if buckets are not rolled to COLD quickly enough, they will fill up the HOT volume. This will be important for the large indexes. For test, we set frozenTimePeriodInSecs to expire after 2 months since the data is not meant to be kept like in other indexes. We have to define these items here because otherwise they would be inherited from the default index settings.
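frozenTimePeriodInSecs is in seconds, which makes it easy to type a number that means something other than what you intended. A quick conversion helper (days_to_secs is a name I made up) lets you double check any value before it goes into indexes.conf.

```shell
# days_to_secs DAYS : convert a retention period in days to seconds.
days_to_secs() {
  echo $(( $1 * 86400 ))
}

days_to_secs 365   # 1 year
days_to_secs 60    # roughly 2 months
days_to_secs 7     # 1 week
```

This prints 31536000, 5184000 and 604800, so you can compare whatever is in your file against the retention you actually want.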

Now we need to make an inputs file that tells the indexer to listen on port 9997

# nano indexers_global_settings/local/inputs.conf

Paste in this text and save

[splunktcp://9997]

disabled = 0

This setting is going in the app “indexers_global_settings” because that app is intended for all of the indexers. Any general settings that need to get pushed to all indexers should go in this app/folder. If we wanted to update the web GUI, we could add a web.conf file and place additional settings there.

Pushing indexer bundles

Also known as “configuration bundle actions”. This process pushes the apps/folders stored in the master-apps folder on the master node to the slave-apps folder located on all of the indexers attached to the cluster.

Log in to the web GUI of the master node. Go to SETTINGS > INDEXER CLUSTERING.

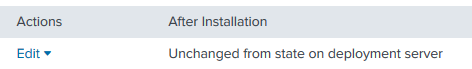

Click the EDIT button and select CONFIGURATION BUNDLE ACTIONS.

Click VALIDATE AND CHECK RESTART.

It will then load all the apps in the master-apps folder, run the configuration as if it were live, and look for problems. If there is a problem it will return status FAILURE, in which case you likely have a typo somewhere that you need to resolve. If it returns status SUCCESS, you are OK to push.

Click PUSH when ready to push the bundle. If a restart is required, it will perform a rolling restart where it restarts each member one by one. If you have enough members in the cluster, it will restart multiple members at the same time. But since we have 3, it will do one at a time.
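In keeping with the CLI being the faster route, the same bundle actions can be done from a shell on the master node. The sketch below only prints the commands so you can review them first; the wrapper name bundle_steps is mine, and I am assuming Splunk is installed in /opt/splunk as in the rest of this guide. Each command will ask for admin credentials when you actually run it.

```shell
SPLUNK=/opt/splunk/bin/splunk   # install path used throughout this guide

# Print (not run) the CLI steps that roughly mirror the GUI buttons:
# validate the bundle, check the validation result, then push.
bundle_steps() {
  echo "$SPLUNK validate cluster-bundle --check-restart"
  echo "$SPLUNK show cluster-bundle-status"
  echo "$SPLUNK apply cluster-bundle"
}

bundle_steps
```

Copy the lines out one at a time on the master node, and only run the apply step once the status shows the validation succeeded.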

For sanity, let’s see what happens when we have a bad validation. In the above indexes.conf file, I’ve changed the path of the disk.

Since the path does not exist it should fail at validation.

Validation returned UNSUCCESSFUL. To find out why, you can check the log on the Master Node at /opt/splunk/var/log/splunk/splunkd.log
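splunkd.log gets long quickly, so it helps to filter it down to the lines that matter. This is a small sketch, assuming the log level appears as a separate word (ERROR or WARN) on each line. The function name log_problems and the sample log lines are made up for the demo.

```shell
# log_problems FILE [COUNT]
# Show the last COUNT (default 20) ERROR or WARN lines from a splunkd.log-style file.
log_problems() {
  grep -E ' (ERROR|WARN) ' "$1" | tail -n "${2:-20}"
}

# Demo on a temp file with invented sample lines.
tmp=$(mktemp)
printf '%s\n' \
  '08-01-2020 10:00:00.000 +0000 INFO  ExampleComponent - sample info line' \
  '08-01-2020 10:00:01.000 +0000 ERROR ExampleComponent - sample error line' > "$tmp"
log_problems "$tmp"
rm -f "$tmp"
```

On the master node you would run log_problems /opt/splunk/var/log/splunk/splunkd.log right after a failed validation.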

The errors should also appear in the MESSAGES area of the GUI, but since these are low power VMs it might take a few minutes to arrive.

Search Head Deployer

The deployer pushes apps to the members in the search head cluster. This needs to be built before the search head cluster is created as you will see below.

Edit server.conf

# nano /opt/splunk/etc/system/local/server.conf

[shclustering]

pass4SymmKey = mySecretKey

shcluster_label = shcluster1

Save and exit.

We also need to add this server to the indexer cluster as a search head. This will allow the Deployer to search if needed. Paste the below text into server.conf

[clustering]

master_uri = https://192.168.11.21:8089

mode = searchhead

pass4SymmKey = thispassword

Save, exit, and restart Splunk.

Search Head Cluster

Now let’s build the search head cluster. For search heads, it’s best to have an ODD number of members, and you need a minimum of 3 for a cluster, so we have 3.

The search head cluster is not managed like the indexer cluster. The apps are distributed to the members by the deployer in the same way the master node distributes its apps to the indexers, but the cluster itself is formed by the members alone. There is a captain member that performs additional tasks the other members do not perform. This is the “manager” of sorts, so we will designate SH1 as the captain and build accordingly.

SSH to all members and the Deployer

Review this command to run on ALL MEMBERS. This will initialize each member to prepare the member to be joined to a cluster.

# /opt/splunk/bin/splunk init shcluster-config -auth admin:'ADMINPASS' -mgmt_uri https://NEW_MEMBER_IP:8089/ -replication_port PORT -conf_deploy_fetch_url https://DEPLOYER_IP:8089/ -secret SECRET_KEY -shcluster_label LABEL_NAME

Where ADMINPASS is the admin password stored in the password safe

NEW_MEMBER_IP is the IP address (or DNS hostname) of the search head member to be added

PORT is an unused TCP port; it needs to be unique to that server, so use something like 9101 for server 1 and 9102 for server 2, etc.

DEPLOYER_IP is the IP address or DNS hostname of the Search Head Deployer

SECRET_KEY is the search head secret key

LABEL_NAME is the label for the search head cluster (used for monitoring) and needs to be the same for each member of that cluster.

These are the commands for all of our search heads

SH1

# /opt/splunk/bin/splunk init shcluster-config -auth admin:'splunkpass' -mgmt_uri https://192.168.11.12:8089 -replication_port 9101 -replication_factor 2 -conf_deploy_fetch_url https://192.168.11.15:8089 -secret mySecretKey -shcluster_label shcluster1

SH2

# /opt/splunk/bin/splunk init shcluster-config -auth admin:'splunkpass' -mgmt_uri https://192.168.11.13:8089 -replication_port 9102 -replication_factor 2 -conf_deploy_fetch_url https://192.168.11.15:8089 -secret mySecretKey -shcluster_label shcluster1

SH3

# /opt/splunk/bin/splunk init shcluster-config -auth admin:'splunkpass' -mgmt_uri https://192.168.11.14:8089 -replication_port 9103 -replication_factor 2 -conf_deploy_fetch_url https://192.168.11.15:8089 -secret mySecretKey -shcluster_label shcluster1

Run the above 3 commands on the CORRECT search heads and restart splunk on each member.
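The three commands only differ in the member IP and the replication port, so if you add more search heads later it is less error-prone to generate them. Here is a sketch that prints one command per member using the same values as above. The function name init_cmd and the variable names are mine; nothing is executed, it only prints.

```shell
DEPLOYER=192.168.11.15   # deployer IP from this lab
SECRET=mySecretKey       # search head cluster secret from this lab
LABEL=shcluster1         # cluster label from this lab

# init_cmd MEMBER_IP REPLICATION_PORT : print the init command for one member.
init_cmd() {
  echo "/opt/splunk/bin/splunk init shcluster-config -auth admin:'splunkpass'" \
       "-mgmt_uri https://$1:8089 -replication_port $2 -replication_factor 2" \
       "-conf_deploy_fetch_url https://$DEPLOYER:8089 -secret $SECRET -shcluster_label $LABEL"
}

# Ports 9101, 9102, 9103 follow the numbering suggested earlier.
n=1
for ip in 192.168.11.12 192.168.11.13 192.168.11.14; do
  init_cmd "$ip" "$((9100 + n))"
  n=$((n + 1))
done
```

Each printed line still has to be run on the search head whose IP appears in its -mgmt_uri.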

On the member you wish to make captain, here is the command syntax:

# /opt/splunk/bin/splunk bootstrap shcluster-captain -servers_list "https://SH1_IP:8089,https://SH2_IP:8089,https://SH3_IP:8089" -auth admin:'ADMINPASS'

Where SH1_IP, SH2_IP, and SH3_IP are the IPs of each member

ADMINPASS is the admin password for the splunk server you are using

The command should look like this:

# /opt/splunk/bin/splunk bootstrap shcluster-captain -servers_list "https://192.168.11.12:8089,https://192.168.11.13:8089,https://192.168.11.14:8089" -auth admin:'splunkpass'

The captain is now ready. You can view cluster status with this command:

# /opt/splunk/bin/splunk show shcluster-status -auth admin:'splunkpass'

Finally, run this command on all search head cluster members and the deployer. This command tells the Splunk instance to associate with the indexer cluster as a search head. This allows the search head to pull specific data from the indexers, most importantly the list of available indexes.

# /opt/splunk/bin/splunk edit cluster-config -mode searchhead -master_uri https://MASTER_NODE:8089 -secret SECRET -auth admin:'ADMINPASS'
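The -servers_list value is just the member management URIs joined with commas, with no spaces. If you would rather not hand-type it, this sketch builds the string from a list of IPs. The function name servers_list is hypothetical, and port 8089 is the management port used throughout this guide.

```shell
# servers_list IP... : join member IPs into the comma-separated URI list.
servers_list() {
  list=""
  for ip in "$@"; do
    # Add a comma only when the list already has an entry.
    list="${list:+$list,}https://$ip:8089"
  done
  echo "$list"
}

servers_list 192.168.11.12 192.168.11.13 192.168.11.14
```

The output can be pasted between the quotes of the bootstrap command.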

Where MASTER_NODE is the IP address or DNS hostname of the Master Node (aka Indexer Cluster Master)

SECRET is the Indexer Cluster password

ADMINPASS is the admin password for the splunk server you are using

Restart Splunk on each member, one at a time. From this point on, only one search head can be down at any point. More than one will break the cluster.

Log in to the GUI of one of the search heads. Go to SETTINGS > SEARCH HEAD CLUSTERING. You should see each member listed. This may take a few minutes since these are low-power VMs.
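The “only one can be down” rule comes from majority voting: as far as I understand it, the search head cluster needs more than half of its members up to elect a captain. Here is the arithmetic as a sketch (max_down is a name I made up), which also shows why an odd member count is the better choice.

```shell
# max_down MEMBERS : how many members can be offline while a majority remains.
max_down() {
  majority=$(( $1 / 2 + 1 ))
  echo $(( $1 - majority ))
}

for n in 3 4 5; do
  echo "$n members: $(max_down "$n") can be down"
done
```

With 3 members you can lose 1, with 4 you can still only lose 1, and with 5 you can lose 2. The fourth member adds load without adding any tolerance.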

We will finish with the forwarding tier in Part 3.